LLMeBench

Benchmarking LLMs

This project addresses the growing need to systematically evaluate Large Language Models (LLMs) on standard NLP tasks and to compare them against state-of-the-art methods. While LLMs have demonstrated impressive capabilities across a wide range of information and authoring tasks, benchmarking their performance is often time-consuming, costly, and complex.

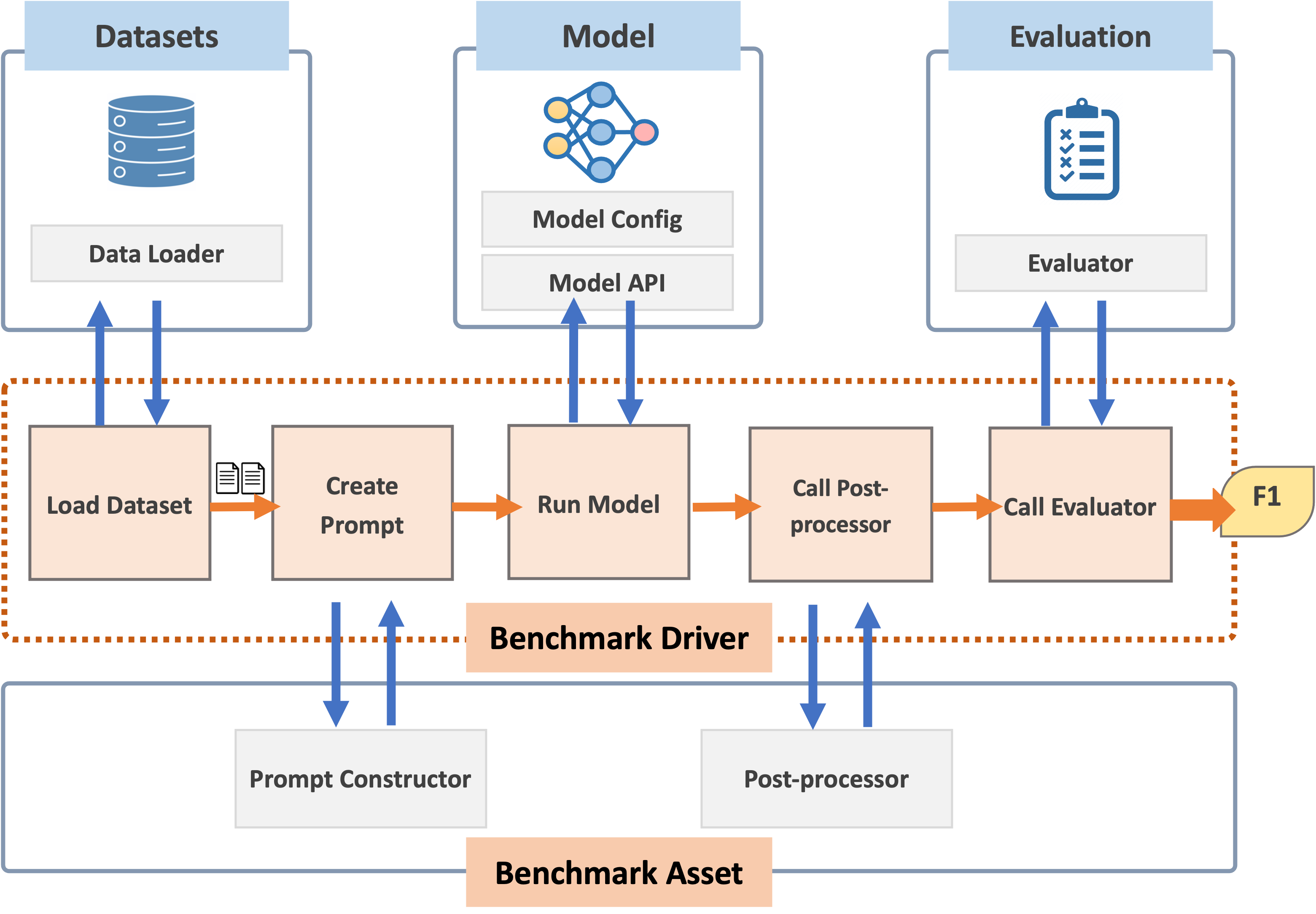

To overcome these challenges, we introduce LLMeBench (LLM Effectiveness Benchmarking), a flexible framework designed to simplify and streamline the evaluation process. LLMeBench gives researchers and developers, experts and non-experts alike, an easy-to-use, plug-and-play solution for assessing LLMs on various NLP tasks. Its design fits into existing experimental workflows, enabling efficient model comparisons and the incorporation of new tasks. The ultimate goal of this project is to make LLM benchmarking more accessible and efficient, thereby advancing NLP research and development.

For updates, please follow our Git repository, LLMeBench.